There are two topics herein; the first is on reusability with Microsoft™ – powerpint in particular, and the second on initiating a reusable asset program.

In one of my recent “previous lives”, there was a pressing need to produce reusable intellectual capital (IC) to permit our consulting and coaching practice to grow. The shame of it was, we just couldn’t do it. Because of time constraints (gotta be productive!! gotta be chargeable!!) we were unable to codify what we learned effectively enough for subsequent practitioners to build on that learning. We also tended to put everything into PowerPoint™ [1]. (I call it powerpint; I hope that doesn’t get me in trouble with Microsoft trademark enforcement!)

As it transpires, it appears to be very difficult to develop effective reuse patterns for powerpint; we didn’t find a way. The elements of reuse are single slides or small groups of slides, and the elements of configuration management are larger and essentially indivisible groups of slides called decks. This impedance mismatch makes a simple reuse task boringly and tediously difficult. (And it’s much more difficult when the only “asset repository” your organization makes available is email – s-m-h.) I’ve heard there is a composition manager available to pull the current versions of single and small collections of slides into “the next deck” but I’ve never been in an organization that uses this composition manager, if it even exists. I suppose it would be possible to code it up in Visual Basic™, but I’ve not seen that done.

One conclusion: yes, graphics are important, but use them effectively. If you are building lots of pictures, consider changing your paradigm from glossy powerpint to meaningful semantics, using a genuine model-building application whose diagrams are views into the underlying, all-important semantic model. When I was at IBM, our original go-to for this was Rational Rose; later it was Rational Software Architect. I’ve not found a free UML modeling tool that does this job with any clarity, but the ones you pay for probably will. Unfortunately, this requires a considerable investment in training time and tools. I’ll consider this aspect more carefully another time. Meanwhile …

Developing Reusable IC while Engaged

The best way to develop reusable capital is to build it for immediate use in the context of one’s current consulting/coaching engagement. One is on the ground, executing, and realizes the need for something, for the benefit of the client. Sometimes it’s a new way of doing business. Sometimes it is an artifact or collection thereof that can be used to facilitate some processes. Usually, it’s both. You build what you need, you try it out and experiment with the client or customer, you refine it based on that experience (but not too much, so as to avoid gold-plating[2]), and then you submit it to the asset repository. And there it sits, waiting for the next need.

The key to its eventual excellence is its reuse, and the first criterion for that is that someone who needs it actually finds it in the repository – we’ll get to that in a minute. Effective reuse does not always look like, “Oh, here! This is perfect! This is exactly what I need!” This is often called pulling the asset off the shelf. It’s rare, but it’s nice when it happens because you’ve found something that will save you work. More often though, effective reuse looks like this: “This might work. I’ll make some refinements to suit my client’s situation and then give it a try.” Once it’s been refined and used, it is reviewed and possibly refined again (once again not too much, so as to avoid gold-plating[2]) and placed back into the asset repository as an update or new version for the next consultant/coach to use.

Note that now, this asset is useful in two different engagement contexts. When a third context arises, it is likely to be better fit-for-purpose and require less work to get it to the point where it is usable in that new context. (It is important to ensure that you don’t break it for purposes of previous contexts to which it has already been applied.) In the fourth context, it’ll be even better. It might never be reusable just by pulling it off the shelf and starting it up. Some small amount of configuration or customization might be required every single time it is used. But it is better every time due to the continuous improvement applied to it, and due to the time and especially thought savings associated with its reuse.

Finding the Asset

I learned the above Build-while-Executing pattern for building and maintaining quality reusable engagement assets while I was at IBM. Most of what I learned came from the gentleman who led the small staff of, well, I’ll call them librarians, who made the asset repository sing; his name is Darrel Rader. Believe me, I consider the role name librarian a compliment. If I recall correctly, our consulting staff was about 500 worldwide and his staff numbered maybe 5. Maintaining the collection of reusable assets in a suitable repository – indeed a repository designed and developed for expressly this purpose by a team at IBM Rational led by another mentor, Grant Larsen – was hard work. This investment paid off extremely well. Hundreds of engagement assets were pioneered and then refined in engagement after engagement; they saved consultants lots of time and improved engagement quality significantly as time passed. I can only hope that the repository still exists and is maintained within IBM. I’m afraid I don’t know.

Darrel spent an impressive amount of time on Search and Find. It turns out, for Darrel and his crew, the assets were the easy part: the consultants built them on their own and were happy to do so! On submission, his crew would review an asset, possibly suggest minor improvements, and then categorize, package and tag the asset within the tool, called Rational Asset Manager (RAM). This was really important: a poorly packaged asset would be hard to reuse, and a poorly tagged asset would never be found.

Packaging

Packaging proved to be extremely important in order to avoid duplication of artifacts. It is worth pausing here to provide a few definitions:

Artifact: a document, for example a powerpint deck, a Word document, a spreadsheet, a text file, a model file. A single thing. If a group of files belongs together tightly, then the artifact might be a zip file containing the files in that group. But an artifact is a single thing. An artifact can also be a reference, perhaps a URL, which points to the physical file located in some other repository like Jive™ or SharePoint™ or Git™. And finally, an artifact should be (or soon become) a formal member of one or more assets. Artifacts can be tagged.

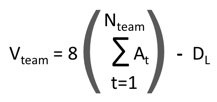

Asset: a governed and audited collection of related artifacts with a declared purpose. We endow the asset with a lifecycle (a state machine, probably derived from its type) and a state within that lifecycle. This promotes an asset-based form of governance: we make decisions on how the asset can/should be used by reviewing the quality of the asset when or after work is done on it (not just on whether the work is “done”). As mentioned above, an artifact might be found in more than one asset; as such, it should be equally usable when sought by practitioners looking to use any asset which references that artifact. Assets must be tagged and categorized; one must be able to find them when they are a possible contributor to the solution to a consulting/coaching issue.
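To make the two definitions concrete, here is a minimal Python sketch of the data model. It is my own illustration, not how Rational Asset Manager was built: the state names, the transition table, and every class and field name below are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    # Hypothetical lifecycle states; a real program would define its own.
    DRAFT = "draft"
    SUBMITTED = "submitted"
    APPROVED = "approved"
    RETIRED = "retired"


# Hypothetical state machine: which states may follow which.
TRANSITIONS = {
    State.DRAFT: {State.SUBMITTED},
    State.SUBMITTED: {State.DRAFT, State.APPROVED},
    State.APPROVED: {State.DRAFT, State.RETIRED},
    State.RETIRED: set(),
}


@dataclass(frozen=True)
class Artifact:
    """A single thing: one file, one zip, or one reference (URL)."""
    name: str
    location: str  # file path, or a URL into some other repository
    tags: frozenset = frozenset()


@dataclass
class Asset:
    """A governed collection of artifacts with a declared purpose."""
    name: str
    purpose: str
    category: str
    tags: set = field(default_factory=set)
    artifacts: list = field(default_factory=list)
    state: State = State.DRAFT
    history: list = field(default_factory=list)  # audit trail of transitions

    def move_to(self, new_state, reviewer):
        # Governance: only transitions listed in the state machine are legal.
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"{self.state.value} -> {new_state.value} is not allowed"
            )
        self.history.append((self.state, new_state, reviewer))
        self.state = new_state


# One artifact may be a member of more than one asset.
deck = Artifact("kickoff-deck", "https://example.invalid/kickoff.pptx")
workshop = Asset("Kickoff workshop", "Run a first-week workshop",
                 "workshops", tags={"kickoff"}, artifacts=[deck])
onboarding = Asset("Client onboarding", "Onboard a new client team",
                   "onboarding", tags={"onboarding"}, artifacts=[deck])

workshop.move_to(State.SUBMITTED, reviewer="librarian")
workshop.move_to(State.APPROVED, reviewer="librarian")
```

The point of the sketch is the separation: the artifact knows nothing about lifecycle, while the asset carries the state, the audit history, and the tags and category that make it findable.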

The flexibility of the above packaging means there is work to maintain it. A category hierarchy must exist, but much more importantly, a tagging taxonomy must exist. We found as this capability was being developed at IBM that we quickly lost control of tags. We solved this problem by requiring that every tag be part of a managed tag taxonomy. If you needed a tag that wasn’t in the taxonomy, you went to Darrel’s team; either they would find you the tag you needed, or they would invent one with your concurrence and place it into the taxonomy. Then they would tag your asset with that tag.
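The managed-taxonomy rule is simple enough to sketch in a few lines of Python. This is an illustration under my own assumptions, not the IBM implementation: the `TagTaxonomy` class, its method names, and the normalization rule (lowercase, hyphens for spaces) are all hypothetical.

```python
class TagTaxonomy:
    """A closed vocabulary of tags that only the librarians extend."""

    def __init__(self, tags=()):
        self._tags = {self._normalize(t) for t in tags}

    @staticmethod
    def _normalize(tag):
        # Assumed convention: trim, lowercase, hyphens instead of spaces.
        return tag.strip().lower().replace(" ", "-")

    def __contains__(self, tag):
        return self._normalize(tag) in self._tags

    def add(self, tag, approved_by):
        """Add a new tag; approved_by records which librarian agreed."""
        if not approved_by:
            raise ValueError("a new tag needs a librarian's approval")
        normalized = self._normalize(tag)
        self._tags.add(normalized)
        return normalized

    def validate(self, tags):
        """Return the normalized tags, or raise naming the unmanaged ones."""
        unknown = sorted(t for t in tags if t not in self)
        if unknown:
            raise ValueError(f"not in taxonomy: {unknown}")
        return {self._normalize(t) for t in tags}


taxonomy = TagTaxonomy(["agile coaching", "estimation"])
accepted = taxonomy.validate(["Agile Coaching"])  # {'agile-coaching'}

try:
    taxonomy.validate(["story mapping"])  # not managed yet, so rejected
except ValueError:
    # The practitioner goes to the librarians, who add the tag.
    taxonomy.add("story mapping", approved_by="librarian")

accepted_later = taxonomy.validate(["story mapping"])  # {'story-mapping'}
```

Rejecting unknown tags at submission time is what keeps the vocabulary from drifting into a pile of near-synonyms.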

Searching and Finding

The taxonomy was the single most important element of the librarians’ efforts making the reusable asset repository useful in the long term. Put succinctly, if you were looking for something, you consulted the taxonomy, chose your search terms, did your search, and behold … assets worthy of your consideration for use/reuse.
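The search flow just described (consult the taxonomy, choose terms, search) can be sketched as below. This is a toy illustration, not RAM's search: the repository contents, the tag names, and the ranking rule (most matching tags first, then by name) are assumptions of mine.

```python
def search(repository, wanted_tags, taxonomy):
    """Return asset names sharing at least one wanted tag, best match first."""
    wanted = set(wanted_tags)
    unknown = wanted - taxonomy
    if unknown:
        # Search terms come from the taxonomy, exactly like the tags do.
        raise ValueError(f"not in taxonomy: {sorted(unknown)}")
    hits = [
        (len(wanted & tags), name)
        for name, tags in repository.items()
        if wanted & tags
    ]
    # Most matching tags first; ties broken alphabetically by name.
    hits.sort(key=lambda hit: (-hit[0], hit[1]))
    return [name for _, name in hits]


# Hypothetical taxonomy and repository, for illustration only.
TAXONOMY = {"estimation", "kickoff", "retrospective", "workshop"}
REPOSITORY = {
    "Kickoff workshop kit": {"kickoff", "workshop"},
    "Estimation primer": {"estimation"},
    "Retrospective formats": {"retrospective", "workshop"},
}

results = search(REPOSITORY, {"kickoff", "workshop"}, TAXONOMY)
print(results)  # ['Kickoff workshop kit', 'Retrospective formats']
```

Note that the second result is only a partial match: it is an asset “worthy of consideration”, not necessarily one you can pull off the shelf.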

Discipline and Evangelism

A most important role of the librarians was evangelism. Encouraging practitioners to build and submit, or find and refine, assets in conjunction with their work never stopped. This would not have worked had the practitioners (coaches and consultants) lacked permission and the resources to build, refine, and share ownership over our reusable assets. For example, if one is continuously “full time” on engagements, asset development rarely occurs. It was also common for the librarians to review a proposed submission and suggest combining it with a previous submission or two to make a refined asset rather than a new asset. It remained difficult to find what you were searching for even with all the effort on tagging. But it was possible and worth the effort.

Summary

The above constitutes the beginnings of a genuine intellectual capital development and leveraging strategy, backed up by an ongoing investment in growing capability, both in tools (repository) and labor (librarians and consultants). The result was a robust reusable asset management program that yielded higher quality consulting and continuous improvement. It also made IBM a joy to work at, because your work was acknowledged and supported. Indeed, it was specifically measured by reuse, and rewards acknowledging contributions were possible based on those measurements. Indeed my friends, those were the days.

[1] With apologies to Microsoft™, because PowerPoint isn’t really a bad product, just a horribly misused one. I do have a friend who says “we are all subject to the vast mediocrity of Microsoft”, but that isn’t quite true. Rising above it does often require organizational buy-in; if we have that, we can do so with some effort, and it’s often quite valuable to do so.

[2] Gold-plating, see https://densmore.lindenbaum.us/dysfunction/rock-engineering/